TTMI(2): Benefits from capacity slotting and U.S. reshoring, expanding in the U.S. and Malaysia.

Policy tailwinds and rising CSP capex are accelerating AI infrastructure build-outs. GB200 raises PCB value, and TTMI benefits from capacity slotting and U.S. reshoring while expanding in the U.S. and Malaysia.

One Big Beautiful Bill Act

Keep the federal corporate income tax rate at 21%, the level set when the 2017 Tax Cuts and Jobs Act cut it from 35%, so the overall tax burden on businesses stays lower.

Restore 100% expensing for capex (bonus depreciation) as in the 2017 Tax Cuts and Jobs Act—allowing full first-year deduction of qualified capital expenditures. This shortens payback periods for capital-intensive firms (e.g., major CSPs).

Full expensing of domestic R&D: To incentivize U.S. production and research, eligible R&D costs can be fully expensed in the year incurred, benefiting companies such as OpenAI and Anthropic.
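To make the payback effect of 100% expensing concrete, the sketch below compares the first-year tax shield under full expensing with a plain straight-line schedule. The $100M purchase, the five-year straight-line life, and the function name `first_year_tax_shield` are illustrative assumptions, not figures from the bill or from any company; real depreciation schedules (e.g., MACRS) are more involved.

```python
def first_year_tax_shield(capex, tax_rate=0.21, expensed_share=1.0, life_years=5):
    """Return the tax saved in year one on a capital purchase.

    expensed_share is the fraction deducted immediately (1.0 = 100% expensing).
    The remainder is depreciated straight-line over life_years, which is a
    simplifying assumption; actual tax schedules differ.
    """
    immediate = capex * expensed_share
    straight_line_year_one = (capex - immediate) / life_years
    return tax_rate * (immediate + straight_line_year_one)


# Hypothetical $100M data-center purchase (illustrative figure only).
capex_musd = 100.0
full = first_year_tax_shield(capex_musd)                        # 100% expensing
spread = first_year_tax_shield(capex_musd, expensed_share=0.0)  # no bonus depreciation

print(f"Year-one tax shield, full expensing: ${full:.1f}M")
print(f"Year-one tax shield, straight-line:  ${spread:.1f}M")
```

Under these assumptions the year-one shield is $21.0M with full expensing versus $4.2M on the straight-line schedule. Total deductions over the asset's life are the same in both cases; full expensing only pulls them forward, which is what shortens the payback period.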

America’s AI Action Plan

Accelerate AI innovation by ensuring the U.S. builds the most capable AI systems, removing unnecessary barriers and encouraging open-source.

Scale AI infrastructure by fast-tracking reviews for data centers, foundries, and power projects—clearing obstacles to capacity expansion.

Uphold diplomacy and AI security: shape global rule-setting, export U.S. AI capabilities, prevent leakage of advanced compute to competitors, and collaborate with allies—supporting the rise of Sovereign AI.

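TTMI stands to benefit from this policy backdrop: faster data-center build-outs lift PCB demand, and incentives for domestic production favor its North American manufacturing footprint.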

Big Four CSPs: Capex is accelerating compute build-outs

Microsoft (~30–40% to GPUs)

Q2 2025 capex of $24.2B, primarily for data-center build-outs.

Capex growth expected to moderate in 2026.

Google (~60% GPUs + TPUs / 40% networking)

Q2 2025 capex of $22.4B.

2025 capex guidance raised from $75B to $85B.

Meta

Q2 2025 capex of $17.0B, focused on servers and networking; also reflects higher procurement costs.

2025 capex guidance lifted to $66–72B; 2026 capex growth expected to be comparable to 2025.

Amazon (>50% to AI)

Q2 2025 capex of $31.4B; Q3–Q4 to remain at similar levels.

2025 capex revised above the prior $105B expectation.
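As a quick check on the scale involved, the sketch below adds up the four Q2 2025 capex figures quoted above and computes the size of Google's guidance raise. It uses only numbers from this note; the variable and function names are illustrative.

```python
# Q2 2025 capex in $B, as quoted in this note.
q2_2025_capex = {"Microsoft": 24.2, "Google": 22.4, "Meta": 17.0, "Amazon": 31.4}

total = sum(q2_2025_capex.values())


def share_of_total(company):
    """Fraction of the combined Q2 2025 capex attributable to one company."""
    return q2_2025_capex[company] / total


def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Google's 2025 guidance moved from $75B to $85B.
google_guidance_raise = pct_change(75, 85)

print(f"Combined Q2 2025 capex: ${total:.1f}B")
for name in q2_2025_capex:
    print(f"  {name}: {share_of_total(name):.0%} of total")
print(f"Google 2025 guidance raise: {google_guidance_raise:.1f}%")
```

On these figures the four companies spent about $95.0B combined in a single quarter, and Google's guidance raise works out to roughly 13.3%.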

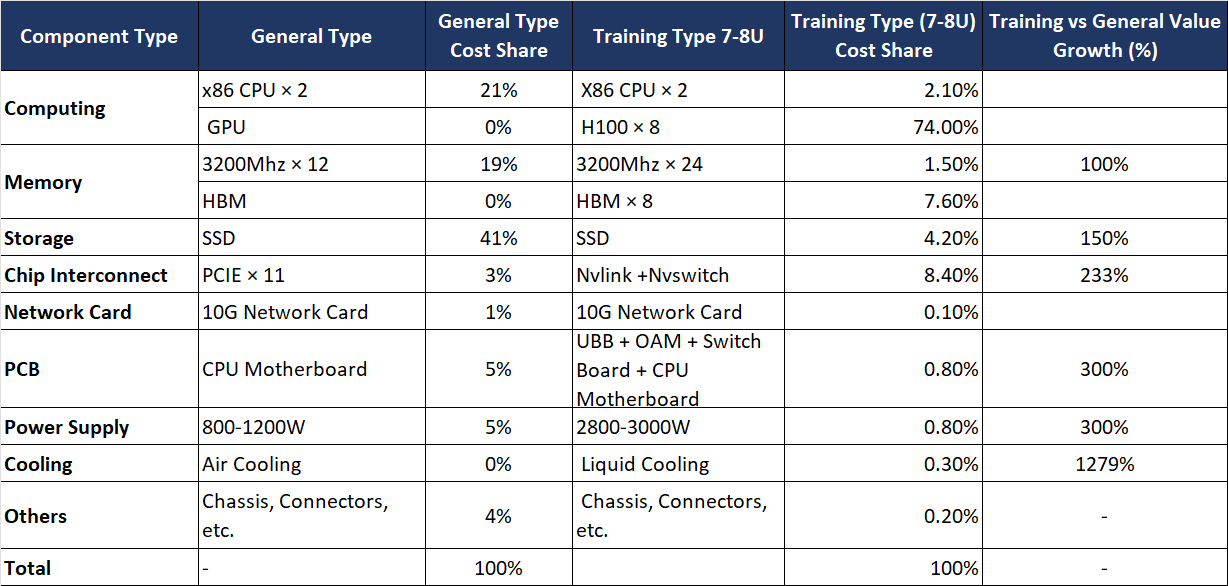

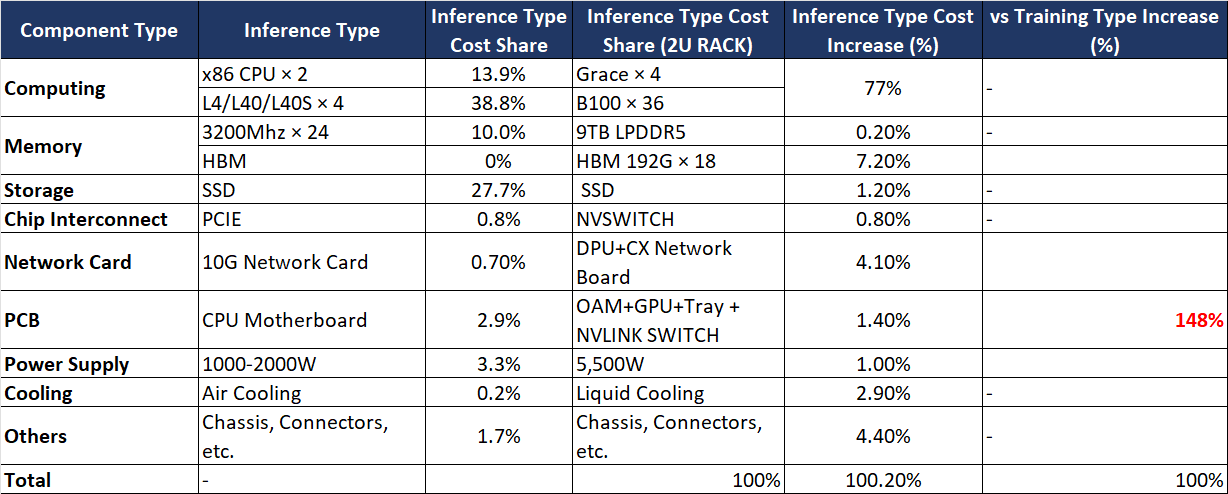

Server BOM snapshot: PCB share is modest—but value growth is striking

Type & price bands

General-purpose servers: $3,000–$15,000 (assume $9,500).

AI training: $200,000–$3,000,000 (assume $250,000; large clusters can run into the millions).

AI inference: $60,000 (typical build).

Training + inference hybrids: $1.5M–$3.0M, depending on scale and redundancy.
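The price gap between server types can be expressed as a multiple of the general-purpose baseline. The sketch below uses only the assumed unit prices from the bands above; the names are illustrative. It compares system prices only, since this note does not give a PCB share of the bill of materials.

```python
# Assumed unit prices in USD, taken from the price bands above.
assumed_price = {
    "general_purpose": 9_500,
    "ai_inference": 60_000,
    "ai_training": 250_000,
}


def multiple_of_baseline(kind, baseline="general_purpose"):
    """Price of one server type as a multiple of the baseline type."""
    return assumed_price[kind] / assumed_price[baseline]


for kind in ("ai_inference", "ai_training"):
    print(f"{kind}: {multiple_of_baseline(kind):.1f}x a general-purpose server")
```

With these assumptions an inference build costs about 6.3x a general-purpose server and a training build about 26.3x, so even a modest PCB share of the bill of materials implies much higher PCB value per system.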

What drives PCB demand higher

Versus general-purpose boxes, AI systems add GPUs and push upgrades in storage, cooling (air → liquid), power (higher draw), and PCBs (layer count, thermal performance, reliability).

Under GB200 architectures, modular topologies like 4+9+5 / 8+9+10 increase PCB count and specs; for similar rack-level configurations, PCB value can be ~1–2× higher than in traditional training builds.

ASIC ecosystems need capacity slotting—and a shift back to North American manufacturing

TTMI is currently the largest PCB manufacturer in North America, with nearly 25% of revenue coming from data-center customers. Based on our research, leading CSPs and AI companies (AWS, Meta, OpenAI, Google, Microsoft, and NVIDIA) are shifting more orders to TTMI, both to diversify their supply chains and to increase North American production.

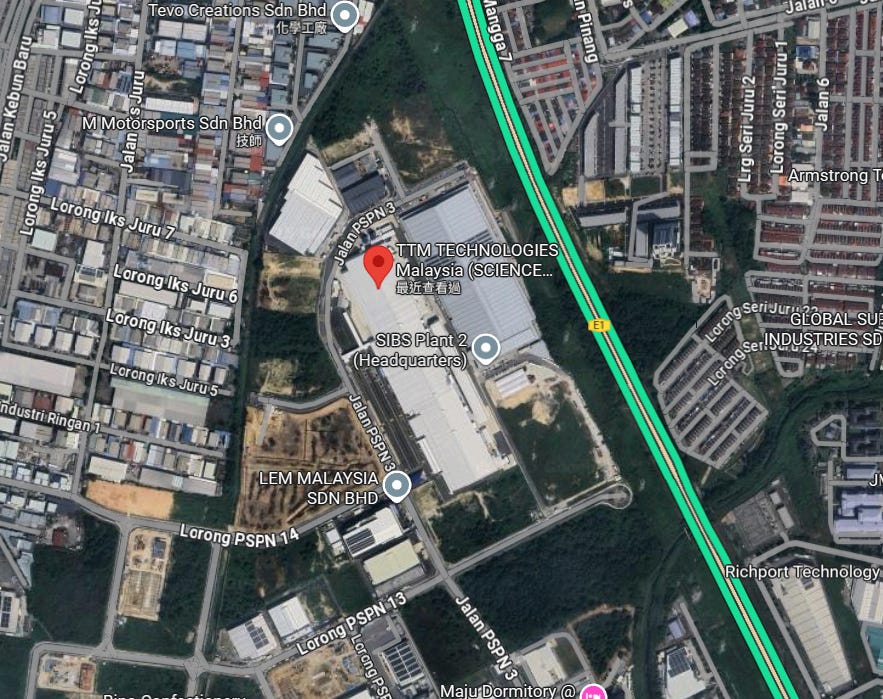

The company is expanding across North America and Malaysia:

Investing $500 million to build a new plant in Syracuse, New York.

Acquiring a 750,000 sq. ft. facility in Eau Claire, Wisconsin.

Adding 800,000 sq. ft. in Penang, Malaysia, and securing adjacent land to prepare for a second facility as demand continues to rise.

Notably, TTMI’s Malaysia site sits next to Taiwan Union Technology (TUC) in Penang, enabling tighter material and supply-chain coordination.