800V HVDC: Powering the Next Generation of AI Data Centers

Inside Nvidia's push to re-engineer data center power, moving from 48V to an 800V standard.

Recent Life

Hey everyone! I recently moved to Austin and am still getting used to everything—the Texas heat and the Austin traffic, but we’re adjusting pretty well so far. We also went to our very first F1 race last week, and it’s totally different from watching it on TV. The sound of the engines in person really gets your heart racing. Congrats to Max from the Red Bull team for taking the win that day!

This is my first long-form article diving deep into the industry. I’d love for more friends in this field to reach out via email and chat about your thoughts on data centers, energy, AI applications, or anything related—I’m really interested in all of it. In the future, I’ll be sharing more articles like this, along with some updates on my personal life.

Today we will explore the development trends and key points of 800V HVDC. Most of the information is based on public data and supply chain research. At the OCP conference held in October 2025, Nvidia released a paper titled “800 VDC Architecture for Next-Generation AI Infrastructure,” which details the 800 VDC power architecture Nvidia is promoting to address the power consumption challenges of AI data centers.

Why is the 800V HVDC architecture needed, and why now?

In one sentence: The power consumption of AI servers is increasing, requiring a new power supply system to meet efficiency needs.

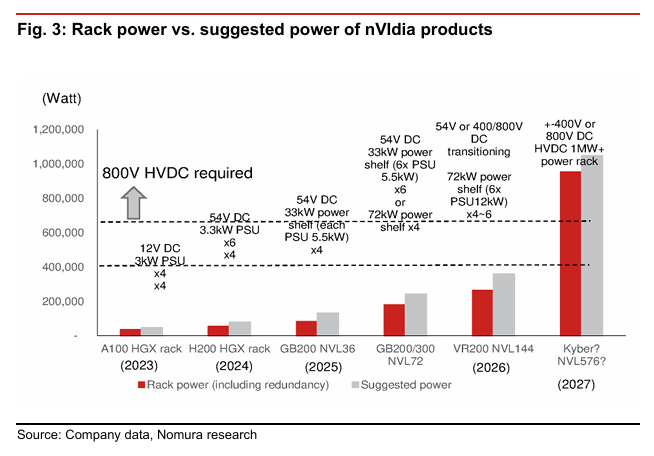

The current mainstream power solution places 33kW and 72kW power shelves in IT racks, using 48-54V (approx. 50V) DC as the intermediate bus voltage. As GPU power consumption soars (e.g., the Blackwell GPU requires 130 DrMOS chips), distributing that power at roughly 50V requires very high current, which leads to severe power loss and a large amount of copper cabling, making the architecture unsustainable.

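A simple calculation: powering a 1MW rack requires 200 kg of copper busbars. At that rate, a 1GW data center (about 1,000 racks) would need roughly 200,000 kg (200 tonnes) of copper for rack busbars alone. The widely quoted figure of 500,000 tons does not follow from these inputs, but even the smaller number is a heavy burden in terms of cost and physical space.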
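The copper arithmetic can be checked in a few lines. This is only a sketch that takes the 200 kg per 1MW rack figure at face value and scales it linearly to 1GW; the variable names are illustrative, and real busbar mass depends on rack layout and current rating.

```python
# Inputs taken from the text above; names are illustrative.
COPPER_PER_RACK_KG = 200   # busbar copper for one 1 MW rack
RACK_POWER_MW = 1
SITE_POWER_MW = 1000       # 1 GW data center

# Number of 1 MW racks needed to reach 1 GW.
racks = SITE_POWER_MW // RACK_POWER_MW

# Linear scaling of the per-rack copper figure.
total_copper_kg = racks * COPPER_PER_RACK_KG
total_copper_tonnes = total_copper_kg / 1000

print(f"{racks} racks -> {total_copper_kg:,} kg ({total_copper_tonnes:,.0f} tonnes)")
```

Under these inputs the result is 200,000 kg, or 200 tonnes, for rack busbars.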

To address this, the NVIDIA Technical Blog published an article on May 20, 2025, titled “NVIDIA 800 VDC Architecture Will Power the Next Generation of AI Factories.” This was the first time NVIDIA publicly introduced its plan to transition data center power infrastructure to 800 VDC.

The old approach was for each rack to do its own power conversion. AC power would come in from the outside, be converted to 48V DC by PSUs (Power Supply Units) at the bottom, and then converted again to the IV (Intermediate Voltage) used by the chips.

This method faced several problems: each GPU board carried hundreds of power chips (DrMOS) that consumed a lot of power and ran very hot, and the low-voltage distribution needed too much copper, about 200 kg for an entire cabinet.

The new architecture is called HVDC (High Voltage Direct Current). It centralizes the AC to 800V DC conversion, sends it to the server rows, and only steps down the voltage near the chips. This largely borrows from electric vehicle (EV) charging technology, making it cheaper and faster to deploy.

The advantages of this are:

Improved Efficiency: At the same power level, increasing the voltage to 800V significantly reduces the current ($P=VI$), thereby drastically cutting power loss during transmission.

Cost Savings: Lower current means less copper is needed, saving on material costs.

Mature Technology: This 800V architecture heavily borrows from electric vehicle (EV) charging technology, making its deployment cheaper and faster.
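To make the efficiency argument concrete, here is a minimal sketch comparing bus current and resistive loss at 54V and 800V for the same 1MW load. It uses $P=VI$ for current and $I^2R$ for conduction loss. The conductor resistance `R_OHM` is a hypothetical placeholder, not a measured value; only the ratio between the two cases is meaningful.

```python
def bus_current_a(power_w: float, voltage_v: float) -> float:
    """Current drawn at a given power and bus voltage, from P = V * I."""
    return power_w / voltage_v


def conduction_loss_w(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 * R) loss in a conductor of the given resistance."""
    current = bus_current_a(power_w, voltage_v)
    return current ** 2 * resistance_ohm


RACK_POWER_W = 1_000_000   # 1 MW rack, as discussed in the text
R_OHM = 0.0001             # hypothetical conductor resistance, for illustration only

for volts in (54, 800):
    amps = bus_current_a(RACK_POWER_W, volts)
    loss = conduction_loss_w(RACK_POWER_W, volts, R_OHM)
    print(f"{volts:>3} V: {amps:>9,.0f} A, loss {loss:>9,.1f} W")

# For the same conductor, loss scales with the square of the current ratio.
loss_ratio = (conduction_loss_w(RACK_POWER_W, 54, R_OHM)
              / conduction_loss_w(RACK_POWER_W, 800, R_OHM))
print(f"Loss ratio 54 V vs 800 V: {loss_ratio:.0f}x")
```

At 1MW the current drops from about 18,500 A at 54V to 1,250 A at 800V. In practice designers use the lower current to shrink the conductors rather than to collect the full loss reduction, which is where the copper savings come from.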

At the same time, the biggest risk is the centralized Single Point of Failure (SPOF): If this centralized high-voltage PSU fails, it could cause a large number, or even an entire row, of cabinets to go down. Therefore, redundant design is crucial, which also increases the demand for BBUs (Battery Backup Units).

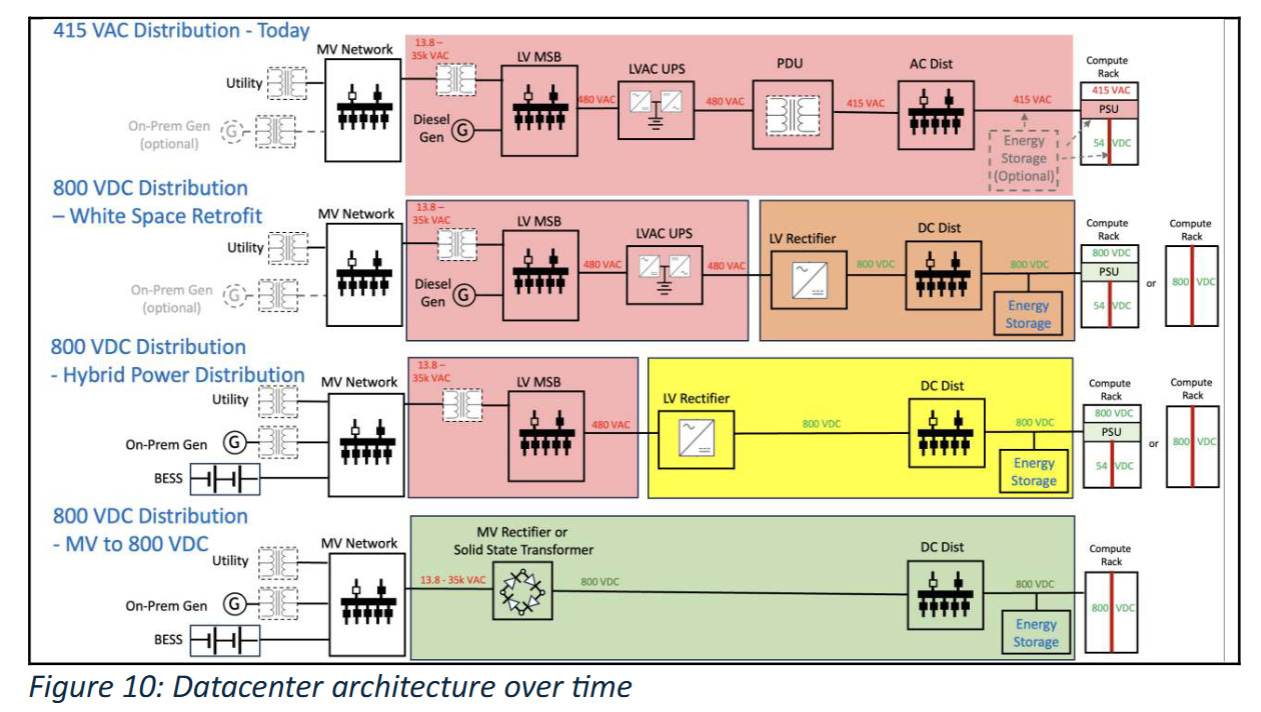

Nvidia’s 800V HVDC white paper outlines four levels of power transmission and their evolutionary stages, including two solutions:

(1) Power Rack (stepping up voltage from 480V to 800V) + Power Supply Unit (PSU) (stepping down 800V to...)

(2) Solid State Transformer (SST), used to perform 10-33 kV AC to 800 VDC conversion.

Nvidia’s current hope is that solution (2) will be the final one implemented. This would completely eliminate many components of the traditional LV AC level (like LV switchgear), achieving the most streamlined, efficient, and reliable “Grid to Rack” power chain to support AI factories with power densities of 1 MW or higher.

Calculating the Number of CSP Power Racks from Data Centers

From the industry’s TAM perspective, OpenAI (OAI) announced that Stargate will invest $400B between 2025-2028 to build 7GW of computing power. OAI has also declared plans with NVDA/AMD/AVGO to build 10GW/6GW/10GW of computing power, totaling 26GW.

One GW requires approximately 1,000 800 VDC power racks (1 GW ÷ 1 MW/rack = 1,000). Based on OAI’s plan, this would require about 26,000 800 VDC power racks. Of course, this is the most optimistic scenario, giving us a ceiling for estimation.
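The rack-count ceiling is simple enough to script, which also makes it easy to test less optimistic scenarios. The sketch below uses the announced capacities and the 1 MW/rack assumption from the text; the `adoption` parameter is a hypothetical knob for the share of capacity that actually ships on 800 VDC, not a figure from any source.

```python
# Announced OpenAI capacity plans from the text, in GW.
announced_gw = {"NVDA": 10, "AMD": 6, "AVGO": 10}

RACK_POWER_MW = 1  # one 800 VDC power rack per 1 MW, as assumed above


def power_racks_needed(capacity_gw: float,
                       rack_power_mw: float = RACK_POWER_MW,
                       adoption: float = 1.0) -> int:
    """Racks implied by a capacity figure; `adoption` is a hypothetical 800 VDC share."""
    return round(capacity_gw * 1000 / rack_power_mw * adoption)


total_gw = sum(announced_gw.values())
ceiling = power_racks_needed(total_gw)
half_adoption = power_racks_needed(total_gw, adoption=0.5)

print(f"Total announced: {total_gw} GW")
print(f"Ceiling (100% adoption): {ceiling:,} racks")
print(f"Illustrative 50% adoption: {half_adoption:,} racks")
```

With full adoption this reproduces the 26,000-rack ceiling. Any lower adoption share scales the count down proportionally.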

HVDC is basically an architecture designed specifically for the NVL576. The volume will depend on the demand for the NVL576.

However, this isn’t necessarily the case for ASICs. It depends on whether individual CSPs want to adopt it. The author believes that in the long run, everyone will adopt it; it’s just a matter of pace. Because the NVIDIA ecosystem has now defined 800 VDC as the common standard for AI Factories, if AWS adopts the latest NVIDIA reference architecture (or an equivalent self-developed solution), it is highly probable they will ultimately use 800 VDC (or a hybrid form).

According to supply chain research, Meta will need 4,000 racks over the next 2 years, and Google will need 6,000 racks over the same period.

Deep dive into each element of the HVDC power rack

Currently, there are two types of HVDC power racks: the OCP (Open Compute Project) standard and the NVIDIA standard 800V high-voltage DC power rack.

OCP (±400V): A transitional or compatibility solution. Led by Cloud Service Providers (CSPs), it uses a positive and negative 400V output, which can adapt to existing 400V equipment while also supporting 800V.

NVIDIA (Monopolar 800V): A direct-to-800V solution. The goal is to convert to 800V DC right from the substation and directly power future MW-level AI racks, pursuing higher end-to-end efficiency.

From this point, we begin to quantify the impact of this specification change on the supply chain.

Supply Chain Players and Estimates

Nvidia’s currently announced 800V HVDC partners include:

Silicon providers: Analog Devices, Infineon, Innoscience, MPS, Navitas, OnSemi, Renesas, ROHM, STMicroelectronics, Texas Instruments

Power system components: Delta, Flex Power, Lead Wealth, LiteOn, Megmeet

Data center power systems: Eaton, Schneider Electric, Vertiv

With the GB300, the BBU becomes standard; suppliers include Shunda, Sinpro, and AESKY.

Semiconductors (GaN, SiC): Navitas (NVTS), Innoscience

According to the latest NVTS conference call, the company expects its AI data center business to see significant growth starting from the end of 2026.

Innoscience predicts the global GaN power semiconductor industry will have a compound annual growth rate (CAGR) as high as 98.5% from 2024 to 2028, mainly due to collaboration with the NVIDIA ecosystem to bring AI infrastructure into the Megawatt era.

However, because Innoscience is a Chinese company, I personally believe NVTS will benefit more than Innoscience.

Power Whip usage increases significantly, and value increases by ~3.1x:

One NVL576 unit will use about 100 Power Whips (the NVL72 uses only 8). The reason is Kyber’s shift to 800V HVDC side-mounted power and a single-rack design power of about 600 kW. Industry data from OCP/GTC shows that a large number of flexible, high-current whips will be used for cabling; multiple industry presentations directly cite a usage of ≈100 whips/rack.

Power Supplies: Delta, Vertiv

According to estimates from foreign investment firms, the average selling price (ASP) of a power rack is about $30,000 (excluding PSUs, assuming one power rack supports one compute rack). Based on Delta’s average gross margin for assembly products over the past decade, Delta’s gross margin (GM) on this product is expected to be at least 30%.
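As a rough sanity check on what that ASP implies, the sketch below multiplies it by a rack count. The 26,000-rack figure is the optimistic ceiling from the OpenAI estimate earlier in this article; treating every one of those racks as a $30,000 sale at a 30% margin is a simplifying assumption for illustration, not a forecast.

```python
POWER_RACK_ASP_USD = 30_000   # ASP estimate from the text, excluding PSUs
GROSS_MARGIN = 0.30           # "at least 30%" floor from the text
RACK_CEILING = 26_000         # optimistic ceiling derived earlier in the article


def rack_revenue_usd(racks: int, asp_usd: int = POWER_RACK_ASP_USD) -> int:
    """Total power rack revenue for a given rack count."""
    return racks * asp_usd


def gross_profit_usd(revenue_usd: float, margin: float = GROSS_MARGIN) -> float:
    """Gross profit at a flat margin."""
    return revenue_usd * margin


revenue = rack_revenue_usd(RACK_CEILING)
profit = gross_profit_usd(revenue)

print(f"Revenue ceiling: ${revenue / 1e6:,.0f}M")
print(f"Gross profit at 30% margin: ${profit / 1e6:,.0f}M")
```

Under these assumptions the power rack alone is a market of about $780M, with roughly $234M of gross profit. That is spread across all vendors and across the full build-out period.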

Competition

From an HVDC market share perspective, Delta currently holds 60-65% and Vertiv 20%. The gap exists because Delta provides the most complete solution: an integrated Solid State Transformer (SST) + PSU + PDU + BBU product, with an efficiency of 98.5% (ranked #1 in reviews), making it the only “Grid to Chip” architecture provider.

The most critical part is the Solid State Transformer (SST). Nvidia’s roadmap shows that it hopes to completely eliminate many components of the traditional LV AC level (like LV switchgear), achieving the most streamlined “Grid to Rack” path.

The key to Delta’s SST position is its cooperation with the US Department of Energy (DOE), which dates back to the rise of EVs in 2022. Delta has directly participated in drafting the UL + IEEE C57.16 SST standard and is the sole Asian technical review representative.

What is the UL + IEEE C57.16 SST standard draft?

C57.16 was originally used to regulate the safety structure and testing methods of traditional transformers, such as voltage tolerance, thermal design, and magnetic interference. It now adds the new application of “SST (Solid State Transformer),” which replaces the traditional coil and iron core design with power semiconductors such as SiC and GaN devices to convert 13.8 kV to 800 V DC for high-voltage direct current distribution.

Basically, whoever can get the UL + IEEE C57.16 certification can enter the international power grid and AI data center supply chain. Early participants include Delta, Eaton, and Siemens.

< 800 VDC Architecture for Next-Generation AI Infrastructure >

Conclusion

I am most optimistic about 3665 BizLink and 2308 Delta Electronics.

According to Goldman Sachs estimates, Delta’s 2026/2027/2028 EPS are 40/70/117, respectively. The most critical driver of the increase is gross margin, which reaches 42% in 2027.
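The growth implied by those EPS estimates is easier to judge as percentages. The sketch below only performs arithmetic on the three figures quoted above; the function name is illustrative.

```python
# Delta EPS estimates quoted in the text.
eps = {2026: 40, 2027: 70, 2028: 117}


def yoy_growth(prev: float, curr: float) -> float:
    """Year-over-year growth as a fraction (0.75 means +75%)."""
    return curr / prev - 1


years = sorted(eps)
for prev_year, curr_year in zip(years, years[1:]):
    growth = yoy_growth(eps[prev_year], eps[curr_year])
    print(f"{prev_year} -> {curr_year}: {growth:+.1%}")

# Compound annual growth rate across the whole span.
span = years[-1] - years[0]
cagr = (eps[years[-1]] / eps[years[0]]) ** (1 / span) - 1
print(f"CAGR {years[0]}-{years[-1]}: {cagr:.1%}")
```

The estimates imply growth of about 75% and then 67% year over year, or roughly 71% compounded from 2026 to 2028.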

I estimate BizLink-KY’s 2026/2027 EPS at 60/75, respectively.

HVDC architecture significantly reduces TCO: it improves energy efficiency, reduces equipment investment, saves space, and lowers maintenance costs. A full transition to HVDC power supply could reduce a data center’s TCO by approximately 20% to 30%. For this reason, Nvidia will actively introduce HVDC in the subsequent Rubin Ultra.

Delta’s upside potential lies in the combined gross margin of PSU, PDU, and BBU increasing to 40%-45% or more. The market share for HVDC is expected to rise to over 70%. (Currently, Delta has 60-65%, Vertiv 15-20%).

BizLink’s Power Whip usage increases significantly, and value increases by ~3.1x: one NVL576 unit will use about 100 Power Whips (the NVL72 uses only 8). The reason is Kyber’s shift to 800V HVDC side-mounted power and a single-rack design power of about 600 kW.

Stock momentum support: BizLink’s overseas convertible bond has an issue size of 300 million USD and a conversion price of 1,423.5 NTD (a 30% premium).
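The conversion terms also reveal the share price the bond was priced against. A minimal sketch, assuming the 30% premium is measured against a single reference price (typically the closing price at pricing; the exact reference is not stated here):

```python
CONVERSION_PRICE_NTD = 1423.5   # from the bond terms above
CONVERSION_PREMIUM = 0.30       # 30% premium from the bond terms above


def implied_reference_price(conversion_price: float, premium: float) -> float:
    """Share price implied by a conversion price set at a given premium."""
    return conversion_price / (1 + premium)


reference_price = implied_reference_price(CONVERSION_PRICE_NTD, CONVERSION_PREMIUM)
print(f"Implied reference price: {reference_price:,.1f} NTD")
```

This works out to about 1,095 NTD, meaning the stock must rise 30% from that level before conversion becomes attractive.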