I’ve tried to break down next year’s ASIC trends and supply chain outlook in this post and wanted to share my findings with you all! If I got anything wrong, please let me know. I’d love to correct it so we can all grow and learn together.

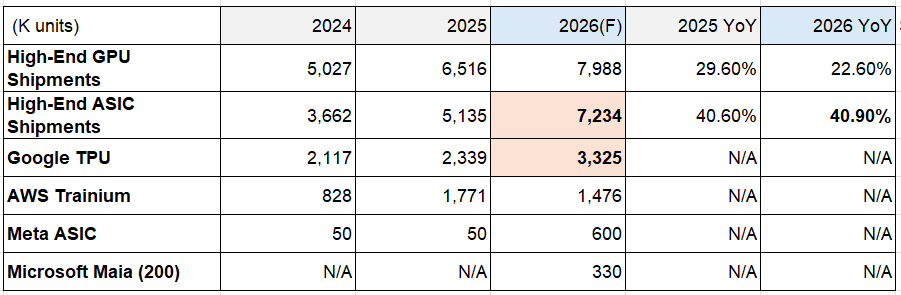

High-end AI ASIC chips look set to become the next critical growth engine for the AI server supply chain, with shipment growth outpacing high-end GPUs in percentage terms in both 2025 and 2026.

Key Market Momentum and Forecasts

High-end ASIC chip shipments are projected to experience significant year-over-year (YoY) growth, exceeding 40% in both 2025 and 2026.

• ASIC Growth Superiority: The growth momentum of high-end ASIC chips is notably superior to high-end GPUs. High-end ASIC YoY growth is projected at 40.6% in 2025 and 41-43% in 2026, compared to high-end GPU YoY growth of 30% and 23% respectively.

• 2026 Shipment Volume: Total high-end ASIC shipments are forecasted to reach 7.234 million units in 2026.

• Primary Driver: Google’s TPU chips are identified as the strongest growth engine for ASIC shipments, continuing to lead the volume share among ASIC chips. Google’s TPU shipments are projected to reach 3.325 million units in 2026, showing an increase of nearly 1 million units compared to 2025.
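To put the headline numbers in proportion, here is a minimal sketch that derives two figures the post does not state directly: the TPU share of 2026 high-end ASIC shipments and the 2025 ASIC base implied by the 41-43% growth range. The variable and function names are my own, and the implied 2025 base is a back-calculation from the quoted numbers, not a DIGITIMES figure.

```python
# Figures quoted above (DIGITIMES forecast); units are millions of chips.
ASIC_2026 = 7.234
TPU_2026 = 3.325
ASIC_YOY_2026 = (0.41, 0.43)  # the quoted 41-43% growth range


def implied_prior_year(current: float, yoy: float) -> float:
    """Back out last year's volume from this year's volume and YoY growth."""
    return current / (1.0 + yoy)


tpu_share_2026 = TPU_2026 / ASIC_2026

# Higher growth implies a smaller prior-year base, so the bounds swap.
asic_2025_low = implied_prior_year(ASIC_2026, ASIC_YOY_2026[1])
asic_2025_high = implied_prior_year(ASIC_2026, ASIC_YOY_2026[0])

print(f"TPU share of 2026 high-end ASIC shipments: {tpu_share_2026:.1%}")
print(f"Implied 2025 ASIC shipments: {asic_2025_low:.2f}-{asic_2025_high:.2f} million")
```

On these inputs the TPU share works out to roughly 46%, and the implied 2025 base lands a little above 5 million units.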

--------------------------------------------------------------------------------

Google TPU (The Strongest Driver)

Google’s TPU supply chain is a key area of focus for 2026.

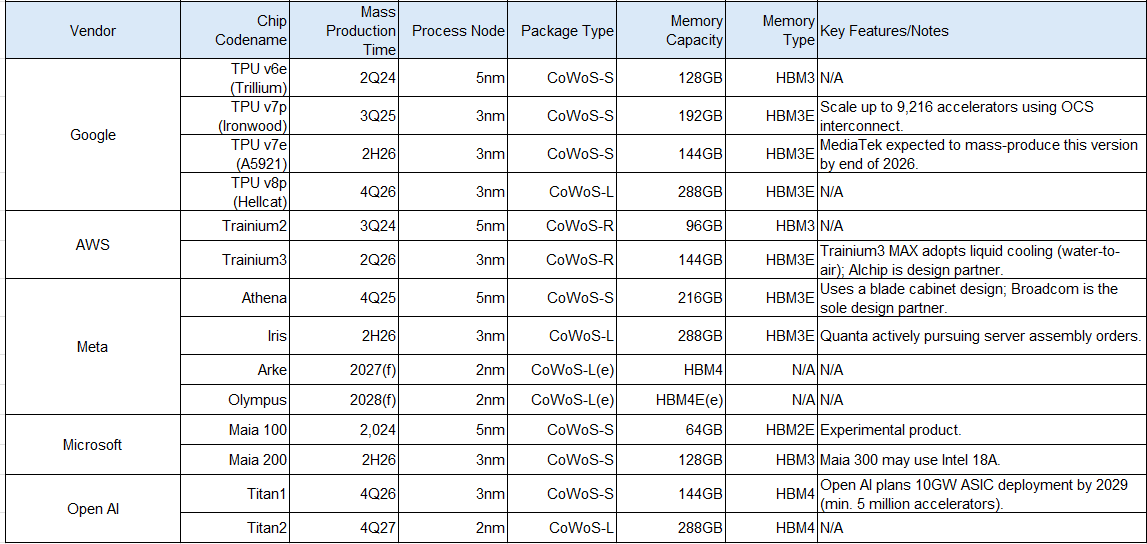

• TPU v7p (Ironwood): This mainstream version is scheduled for mass production in 3Q25. It will use the 3nm process and 192GB HBM3E memory.

◦ Architecture: It maintains the classic 64-accelerator design and utilizes the HLC architecture, with four accelerators per single motherboard.

◦ Scale-up: The scale-up range will be expanded to 9,216 accelerators across 144 cabinets. This expansion relies on the OCS (optical circuit switch) architecture, which provides a unidirectional bandwidth of 600GB/s.

• Future Planning: The TPU v7e (A5921) is scheduled for mass production in 2H26. MediaTek (聯發科) is expected to mass-produce the TPU v7e by the end of 2026.

• Supply Chain: Amkor is anticipated to enter the TPU advanced packaging supply chain in 2H26.
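As a quick sanity check on the Ironwood scale-up numbers above, this sketch confirms that 9,216 accelerators across 144 cabinets matches the 64-accelerator cabinet design, and works out how many four-accelerator boards that implies. The boards-per-cabinet and total-board figures are my own derivation from the quoted numbers, and the constant names are just illustrative.

```python
# Figures quoted in the TPU v7p bullets above.
POD_ACCELERATORS = 9216      # full scale-up domain
POD_CABINETS = 144
ACCELERATORS_PER_BOARD = 4   # "four accelerators per single motherboard"

# Accelerators per cabinet; a non-zero remainder would mean the figures disagree.
per_cabinet, leftover = divmod(POD_ACCELERATORS, POD_CABINETS)
if leftover:
    raise ValueError("accelerators do not divide evenly across cabinets")

# Derived, not quoted: boards per cabinet and boards per full pod.
boards_per_cabinet = per_cabinet // ACCELERATORS_PER_BOARD
total_boards = POD_ACCELERATORS // ACCELERATORS_PER_BOARD

print(f"Accelerators per cabinet: {per_cabinet}")
print(f"Boards per cabinet: {boards_per_cabinet}")
print(f"Boards per pod: {total_boards}")
```

The division comes out even at 64 accelerators per cabinet, so the quoted pod size and the 64-accelerator design are consistent with each other.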

AWS Trainium

AWS Trainium shipments are expected to decline in 2026 compared to 2025, as the platform faces a product transition period.

• 2026 Shipment: Shipments are estimated at 1.476 million units in 2026.

• Trainium3: This version is expected to enter mass production in 2Q26, utilizing the 3nm process and 144GB HBM3E memory.

• Trainium3 MAX: This server system will debut a liquid cooling architecture (water-to-air cooling solution). The architecture is similar to NVL72, featuring four ASICs per computing board.

◦ Interconnect: AWS is collaborating with Astera Labs on the Scale-up solution to achieve Any-to-Any interconnectivity.

◦ Future Outlook: Given the close partnership between AWS and Astera Labs (a major promoter of UALink), the next-generation Trainium4 server is speculated to adopt the UALink architecture, which is projected to launch its first solution in 2027.

• Supply Chain: Alchip is the main chip design partner for Trainium3. Wiwynn and QCT are responsible for the front and back-end server assembly.

Meta ASIC (Athena, Iris)

Meta’s high-end AI ASIC products are expected to achieve volume shipments in 2026.

• 2026 Shipment: Shipments are projected to reach 600,000 units in 2026, a significant increase from the 50,000 units shipped in 2025.

• Athena: Scheduled for mass production in 4Q25 (5nm, 216GB HBM3E). The chip will utilize a blade server cabinet design.

• Iris: Scheduled for mass production in 2H26 (3nm, 288GB HBM3E).

• Roadmap: Meta has a rapid product roadmap, including Arke (2027f, 2nm, HBM4) and Olympus (2028f, 2nm, HBM4E(e)).

• Supply Chain: Broadcom (博通) is the sole design partner for Meta ASIC, supplying the complete accelerator interconnect and networking solutions (including Tomahawk 5/6 and Tomahawk Ultra). Quanta (廣達) is actively vying for the Iris server back-end assembly orders.

Microsoft Maia

Microsoft’s next-generation Maia 200 is set for mass production in the second half of 2026.

• Maia 100: This was an experimental product, with combined shipments of 23,000 units across 2024 and 2025.

• Maia 200: Expected to enter mass production in 2H26, with an estimated full-year shipment of 330,000 units. It uses the 3nm process and 128GB HBM3 memory.

• Future Plans: The subsequent Maia 300 may adopt Intel’s 18A process and advanced packaging technology.
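Adding up the 2026 figures quoted so far for Google, AWS, Meta, and Microsoft shows how much of the 7.234 million total the post actually breaks out. This is a rough sketch under two assumptions of mine: that Maia 200's 330,000 "full-year" figure refers to 2026, and that whatever is left over belongs to vendors or programs the post does not itemize. The remainder is a derived number, not something stated in the source.

```python
# 2026 shipment forecasts quoted above, in thousands of units.
ASIC_TOTAL_2026 = 7234
named_2026 = {
    "Google TPU": 3325,
    "AWS Trainium": 1476,
    "Meta (Athena/Iris)": 600,
    "Microsoft Maia 200": 330,  # assumption: the full-year figure is for 2026
}

named_total = sum(named_2026.values())
# Derived: volume not attributed to any of the four vendors listed here.
unattributed = ASIC_TOTAL_2026 - named_total

for vendor, units in named_2026.items():
    print(f"{vendor:<20} {units:>5}k  {units / ASIC_TOTAL_2026:6.1%}")
print(f"{'Not itemized':<20} {unattributed:>5}k  {unattributed / ASIC_TOTAL_2026:6.1%}")
```

On these inputs the four named programs cover about 5.73 million units, leaving roughly 1.5 million (about a fifth of the total) that the post does not attribute to anyone.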

OpenAI

OpenAI has announced an ambitious long-term ASIC deployment plan.

• 10GW Plan: On October 13, 2025, OpenAI announced a plan to deploy 10GW of ASICs by the end of 2029, estimated to total at least 5 million accelerators.

• Titan1: The first-generation AI ASIC (Titan1) is projected for mass production in 4Q26 (3nm, 144GB HBM4).

• Titan2: Projected for mass production in 4Q27 (2nm, 288GB HBM4).
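The 10GW and 5 million figures above imply a power budget per accelerator, which is worth spelling out. A minimal sketch, with names of my own choosing: because 5 million is a floor on the accelerator count, the result is a ceiling on average power per accelerator, and it is an all-in number that would also have to cover cooling, networking, and host systems, not chip power alone.

```python
# Figures quoted in the 10GW Plan bullet above.
PLAN_POWER_W = 10e9             # 10 GW
MIN_ACCELERATORS = 5_000_000    # "at least 5 million accelerators"


def avg_power_per_accelerator(total_w: float, accelerators: int) -> float:
    """Average all-in facility power per accelerator, in watts."""
    return total_w / accelerators


# With the minimum accelerator count this is an upper bound on the average.
max_avg_w = avg_power_per_accelerator(PLAN_POWER_W, MIN_ACCELERATORS)
print(f"Upper bound on average power per accelerator: {max_avg_w / 1000:.1f} kW")
```

That is, the plan as quoted leaves at most about 2 kW of total facility power per accelerator, and less if the final count exceeds 5 million.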

Table 1: High-End AI Accelerator Shipment Volume and Forecast (2024–2026) (Units: Thousands)

Table 2: Key ASIC Accelerator Specifications and Planning (2024–2028)

Source: DIGITIMES

Where is Marvell's place in this hierarchy? I assumed they had a pretty solid #2 spot for ASICs, but that doesn't seem to be the case anymore?