Weekly Shots of Insight and Market #3

Buffett’s last shareholder letter, PCB Upstream, AMD Analyst Day, GB300 NVL72 MLCommons MLPerf Test Report

Life

In Buffett’s last shareholder letter, the sentence that touched me the most was: “Keep in mind that the cleaning lady is as much a human being as the Chairman.” Having worked for many years, I often need to remind myself to show empathy. When you practice empathy, kindness, respect, and gratitude, your perspective gradually changes, and no amount of money, power, or fame can buy these things.

In the past, I sometimes focused too much on profit and loss at work and overlooked the people around me. My wife often reminded me that regularly caring for the needs of those around me and helping others matters more than performance.

He also wrote: “I was late in becoming old – its onset materially varies – but once it appears, it is not to be denied. To my surprise, I generally feel good. Though I move slowly and read with increasing difficulty, I am at the office five days a week where I work with wonderful people. Occasionally, I get a useful idea or am approached with an offer we might not otherwise have received. Because of Berkshire’s size and because of market levels, ideas are few – but not zero.”

And: “… Yes, even the jerks; it’s never too late to change. Remember to thank America for maximizing your opportunities. But it is – inevitably – capricious and sometimes venal in distributing its rewards. Choose your heroes very carefully and then emulate them. You will never be perfect, but you can always be better.”

Buffett is truly a workaholic, yet at 95 he still works happily and enjoys it, which is remarkable. Thank you, Mr. Buffett: you are my hero in investing, in business, and in how to treat people. I hope I can become more like him.

And from the same letter: “I’m happy to say I feel better about the second half of my life than the first. My advice: Don’t beat yourself up over past mistakes – learn at least a little from them and move on. It is never too late to improve. Get the right heroes and copy them.”

Warren Buffett's final letter to shareholders

Warren Buffett’s 1999 speech at Sun Valley

Looking back at history, I think it’s worth revisiting Warren Buffett’s 1999 speech at Sun Valley, which Fortune adapted into the article “Mr. Buffett on the Stock Market.” It makes several points we are watching especially closely in the current industry cycle:

New technology doesn’t automatically translate into high investment returns. Long-term returns ultimately come back to first principles: Long-term return = Dividend Yield + Earnings Growth ± Change in Valuation.
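To make the formula concrete, here is a minimal sketch using the common additive approximation. The inputs are hypothetical (a 1% dividend yield, 15% annual earnings growth, and a P/E that halves from 40 to 20 over ten years), chosen only to show how a shrinking valuation multiple can absorb much of the growth.

```python
def annualized_return(dividend_yield: float, earnings_growth: float,
                      start_pe: float, end_pe: float, years: int) -> float:
    """Approximate long-term annual return as
    dividend yield + earnings growth + annualized change in valuation (P/E).

    Additive approximation: cross terms between growth and valuation change are ignored.
    """
    valuation_change = (end_pe / start_pe) ** (1 / years) - 1
    return dividend_yield + earnings_growth + valuation_change


# Hypothetical inputs: 1% yield, 15% earnings growth, P/E falls from 40 to 20 over 10 years.
r = annualized_return(0.01, 0.15, start_pe=40, end_pe=20, years=10)
print(f"Approximate annual return: {r:.1%}")
```

The result, roughly 9.3% a year, shows how even 15% earnings growth can end up as single-digit returns when the multiple halves.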

He used the auto and airline industries as examples: The automobile was one of the most important inventions of the first half of the 20th century, but of the hundreds of car manufacturers at the time, only a few survived. Investors as a whole lost heavily. Aviation changed the world, but airlines also had poor long-term returns.

Excellent industry prospects do not guarantee good shareholder returns; competition, capital expenditures, excessive stock issuance, bankruptcies, etc., can erode economic value. (This is somewhat similar to the present, though today’s situation is a bit more complex.)

Unlike many of the internet companies of 1999, today’s tech giants investing in AI infrastructure generate strong cash flows. From a cash-flow valuation perspective, we consider them relatively safer. However, we believe the growing reliance on debt to fund this buildout may be a long-term risk to watch.

AI infrastructure will continue to change the world. Beyond investing, we are also interested in using these tools to experiment with some projects. (We will share more about this later.)

Summary: We agree that AI, like the previous internet revolution and great inventions such as the automobile, airplane, and radio, will change the world.

Even a profound industrial revolution does not guarantee huge returns for early investors.

Using the auto and aircraft manufacturing industries as examples, he pointed out that although these industries had enormous growth potential early on, only a few companies ultimately survived.

In 1999, despite the huge impact of the internet, investors still needed to carefully pick winners and couldn’t rely solely on macro trends. Many companies that led early in the revolution eventually failed.

Market

There were also several company earnings calls and technical articles this week.

PCB Upstream

Insightology View: Upstream fiberglass cloth continues to benefit from strong AI demand. However, we are closely watching capacity expansion timelines, because historically such expansions tend to slow the price growth of upstream materials. For investors, slower price growth leaves less room for margin and earnings upside, which may limit the upside for P/E ratios.

In the current AI materials market, shortages extend beyond memory to substrates. The substrate shortage is driven by upstream materials, including T-Glass fiberglass cloth and copper foil. The primary producer of T-Glass is Japan’s Nittobo (3110.T), and the main supplier of the specialty copper foil used in these substrates is Mitsui Kinzoku.

Two major Japanese materials suppliers, Nittobo (3110.T) and Mitsui Kinzoku (5706.T), recently released results for the period ending September 2025. At first glance the results look contrasting: one company’s profit forecast surged, while the other’s net profit appeared to be halved. But the devil is in the details. Let’s break it down:

Nittobo (3110.T)

Operating profit for the first half rose 29%. The company significantly raised its full-year net profit forecast and now expects an increase of 192.1%, or about 2.9 times last year’s level. Its growth comes mainly from two products:

T-Glass

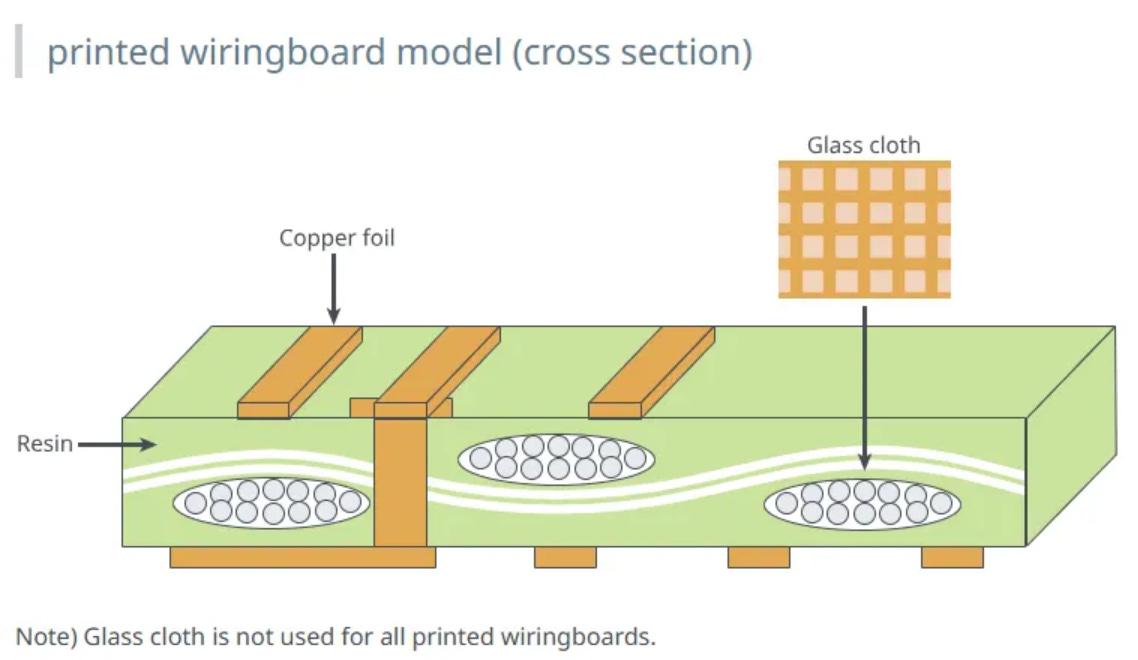

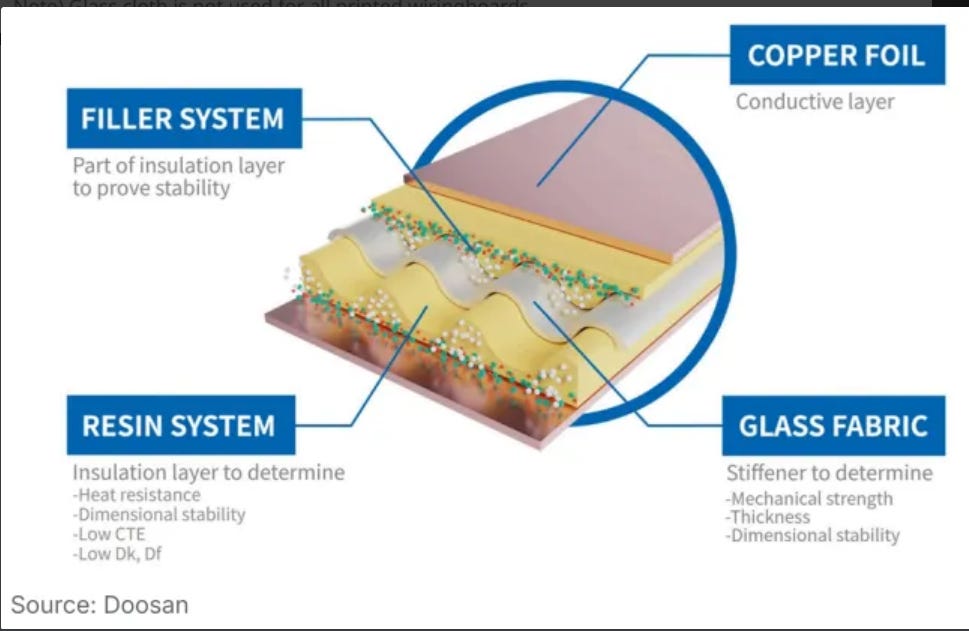

Introduction: T-Glass is a low-thermal-expansion glass cloth used in IC substrates, the “foundation” that carries the chip. Its low coefficient of thermal expansion (CTE) keeps the substrate from warping as the chip heats up and cools down, maintaining dimensional stability.

As AI chips grow more powerful and integrate more dies and layers through advanced packaging such as CoWoS, they need a sturdier, more stable “foundation.” T-Glass has therefore shifted from an “optional component” to a “standard requirement,” with demand tied almost entirely to the advanced packaging needs of AI chips.
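The stability argument comes down to thermal expansion. As a rough sketch, the free-expansion mismatch between two materials of length L is ΔL = (α₁ − α₂) · L · ΔT. The substrate CTE values below are illustrative assumptions, not Nittobo specifications, and the calculation ignores stiffness and geometry, so it is not a warpage model; it only shows why narrowing the gap between substrate and silicon CTE matters.

```python
def expansion_mismatch_um(length_mm: float, cte_a_ppm_per_k: float,
                          cte_b_ppm_per_k: float, delta_t_k: float) -> float:
    """Difference in free thermal expansion (micrometres) between two materials
    of the same length: (alpha_a - alpha_b) * L * dT."""
    length_um = length_mm * 1000.0
    return (cte_a_ppm_per_k - cte_b_ppm_per_k) * 1e-6 * length_um * delta_t_k


SILICON_CTE = 2.6  # ppm/K, approximate room-temperature value for silicon

# Hypothetical substrate CTEs (illustrative only): a conventional core
# versus a lower-CTE core of the kind T-Glass is meant to enable.
for label, substrate_cte in [("conventional core", 12.0), ("low-CTE core", 6.0)]:
    mismatch = expansion_mismatch_um(50.0, substrate_cte, SILICON_CTE, 100.0)
    print(f"{label}: {mismatch:.0f} um mismatch over 50 mm for a 100 K swing")
```

With these assumed values, halving the substrate CTE cuts the mismatch over a 50 mm package from about 47 µm to about 17 µm.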

NE-Glass

Introduction: NE-Glass is a low-dielectric glass cloth that lets electrical signals “run faster with less interference.” AI servers move massive amounts of data internally. With standard materials, signals lose significant energy and speed and are prone to “crosstalk” (interference).

The job of NE-Glass is to serve as the base material for AI server motherboards (PCBs) and switches, so that the vast amounts of data required for AI computing can be transmitted at high speed with minimal loss.
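Part of the “run faster” claim follows from basic electromagnetics: for a trace fully embedded in the dielectric (a stripline), a signal travels at roughly c/√Dk, so a lower dielectric constant shortens the delay along every trace. A minimal sketch, with hypothetical Dk values for a standard and a low-Dk laminate (not NE-Glass datasheet figures):

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light in vacuum, millimetres per picosecond


def delay_ps_per_mm(dk: float) -> float:
    """Propagation delay for a trace fully embedded in a dielectric of constant dk
    (stripline approximation): t = sqrt(Dk) / c."""
    return math.sqrt(dk) / C_MM_PER_PS


# Hypothetical laminate Dk values, for illustration only (not datasheet figures).
for label, dk in [("standard laminate", 4.2), ("low-Dk laminate", 3.4)]:
    per_mm = delay_ps_per_mm(dk)
    print(f"{label}: Dk {dk}: {per_mm:.2f} ps/mm, "
          f"{per_mm * 300 / 1000:.2f} ns over a 300 mm trace")
```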

Transmission Speed Upgrades: Each step up, from PCIe 4.0 to 5.0 and 6.0, and from 400G switches to 800G and 1.6T, requires lower-dielectric (Low-Dk) materials such as NE-Glass.

Increase in AI Server Penetration: Traditional servers can use lower-grade materials, but AI servers must use high-speed materials of NE-Glass’s caliber. The greater the shipment volume of AI servers, the stronger the demand for NE-Glass.

Oligopolistic Market: As with T-Glass, this is an oligopolistic market in which Nittobo holds key technology.
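The step-by-step upgrade pressure can be illustrated with a widely used rule of thumb for dielectric loss, roughly 2.3 × f[GHz] × Df × √Dk dB per inch, evaluated at the Nyquist frequency of each lane rate. This sketch assumes PAM4 lanes at 53.125, 106.25 and 212.5 Gb/s (each a doubling) and two hypothetical laminates. The Dk/Df pairs are illustrative, not NE-Glass specifications, and the formula ignores copper (conductor) loss.

```python
import math


def dielectric_loss_db_per_inch(f_ghz: float, dk: float, df: float) -> float:
    """Rule-of-thumb dielectric loss: about 2.3 * f[GHz] * Df * sqrt(Dk) dB per inch.
    Conductor (copper) loss is ignored, so real total loss is higher."""
    return 2.3 * f_ghz * df * math.sqrt(dk)


def nyquist_ghz(lane_gbps: float, bits_per_symbol: int) -> float:
    """Nyquist frequency is half the symbol rate; PAM4 carries 2 bits per symbol."""
    return lane_gbps / bits_per_symbol / 2


# Hypothetical laminates: (Dk, Df) pairs chosen for illustration only.
LAMINATES = {"standard": (4.2, 0.015), "low-loss": (3.4, 0.002)}
TRACE_INCHES = 10.0  # hypothetical trace length

for lane_gbps in (53.125, 106.25, 212.5):  # PAM4 lane rates, each double the last
    f = nyquist_ghz(lane_gbps, bits_per_symbol=2)
    losses = ", ".join(
        f"{name} {TRACE_INCHES * dielectric_loss_db_per_inch(f, dk, df):.1f} dB"
        for name, (dk, df) in LAMINATES.items()
    )
    print(f"{lane_gbps} Gb/s PAM4 (Nyquist {f:.2f} GHz): {losses}")
```

Because dielectric loss scales linearly with frequency, each doubling of the lane rate roughly doubles the loss per inch on the same board, which is why every generation pushes toward lower-loss materials.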

Nittobo’s Strategy: “Invest heavily in capacity expansion.”

Specific Actions: The company has announced a large-scale capacity investment (recent reports suggest about 15 billion yen), focused primarily on its factory in Taiwan.

Timeline: New capacity is expected to begin ramping up at the end of 2026 and be fully released in 2027-2028. The goal is to triple production capacity to meet the explosive demand “strongly forecasted by customers” over the next few years.

Mitsui Kinzoku (5706.T)

Mitsui Kinzoku’s latest capacity expansion plans for its two major AI copper foil product lines:

VSP™ (HVLP Copper Foil) - Used for AI Server Motherboards

Announced an “additional capacity expansion” for VSP™ (HVLP Copper Foil) used in AI servers, indicating that end-user demand has far exceeded the company’s previous expectations.

Additional Investment: Announced an additional investment of approximately 6 billion yen.

Expansion Locations: Taiwan factory (Taiwan Copper Foil Co., Ltd.) and Malaysia factory.

New Target: Raise total monthly VSP™ capacity from 840 tons in September 2026 to 1,200 tons, with completion expected by the end of September 2028.

Microthin™ (Copper Foil with Carrier) - Used for IC Substrates

This is Mitsui Kinzoku’s flagship product with the highest technical barrier and profitability, used in advanced packaging such as the ABF substrates required for AI chips (GPUs).

Current Plan (Announced January 2025):

Expansion Locations: Ageo Plant (Saitama Prefecture), Japan, and the Malaysia factory.

Target: Plans to gradually increase production capacity, aiming to raise monthly capacity from 4.9 million square meters at the beginning of 2025 to 5.6 million square meters.

Timeline: According to the previous plan, the target is to be achieved between 2027 and 2030.
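To compare how aggressive the two Mitsui Kinzoku plans are, here is a quick growth calculation using the capacity figures above. For Microthin™, the target window of 2027 to 2030 is bracketed with assumed horizons of 2 and 5 years from early 2025; those horizons are our assumption, not company guidance.

```python
def capacity_growth(start: float, end: float, years: float) -> tuple[float, float]:
    """Return (total increase, compound annual growth rate) for a capacity plan."""
    ratio = end / start
    return ratio - 1, ratio ** (1 / years) - 1


plans = [
    # (label, start capacity, target capacity, years to reach it)
    ("VSP, tons/month, Sep 2026 -> Sep 2028", 840, 1200, 2),
    ("Microthin, million m2/month, early 2025 -> early 2027 (assumed)", 4.9, 5.6, 2),
    ("Microthin, million m2/month, early 2025 -> early 2030 (assumed)", 4.9, 5.6, 5),
]

for label, start, end, years in plans:
    total, cagr = capacity_growth(start, end, years)
    print(f"{label}: +{total:.1%} total, about {cagr:.1%} per year")
```

VSP™ works out to roughly 43% more capacity in total, close to 20% a year, while Microthin™ is about 14% in total, or roughly 3% to 7% a year depending on timing.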

Insightology View: Although the expansion plan for Microthin™ isn’t being “topped up” as frequently as VSP™, it remains a stable focus of the company’s capital expenditure. In the latest earnings call, the company confirmed that demand for Microthin™ remains “solid” and is another major pillar of AI demand.

AMD Analyst Day

Insightology View

Most of the technology roadmap presented at the analyst day was already somewhat familiar to us from our own research. What surprised me more was the increase in the total addressable market (TAM) to $1 trillion.

Another point that stood out was the idea that “compute equals intelligence”: if intelligence has no ceiling, neither does the demand for compute. I agree with this view, but growth will still be cyclical, even if this cycle’s wavelength may be longer than previous ones.

AMD targets a data center compound annual growth rate (CAGR) of 60% over the next 3 to 5 years. (This estimate seems a bit high, which makes us slightly apprehensive: it implies some years will be exceptionally strong while others may be weaker.)
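A quick compounding check shows why a 60% CAGR makes us cautious: 60% a year for 3 years is about a 4.1x increase, and for 5 years about 10.5x. The second part of the sketch uses a hypothetical, uneven path (+100%, +60%, +28%) that still averages out to a 60% CAGR, the “some years exceptionally strong, others weaker” pattern.

```python
import math


def cumulative_multiple(cagr: float, years: int) -> float:
    """How many times larger a business becomes after compounding at `cagr` for `years`."""
    return (1 + cagr) ** years


def cagr_from_path(yearly_growth: list[float]) -> float:
    """Equivalent constant annual growth rate for an uneven sequence of yearly growth rates."""
    total = math.prod(1 + g for g in yearly_growth)
    return total ** (1 / len(yearly_growth)) - 1


for years in (3, 5):
    print(f"60% CAGR for {years} years -> {cumulative_multiple(0.60, years):.1f}x")

# Hypothetical uneven path: a boom year, a strong year, then a slower year.
path = [1.00, 0.60, 0.28]
print(f"Path {path} -> equivalent CAGR {cagr_from_path(path):.0%}")
```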

CEO Lisa Su said at the meeting that growth in AI infrastructure demand will not level off but will remain strong, and that customer investment in AI is not “stabilizing.” AMD expects its sales growth to accelerate over the next five years, especially in AI inference.

A Few Numbers

Data center CAGR of 60% over the next 3 to 5 years. (This seems a bit high, which makes us slightly apprehensive.)

Core business CAGR will exceed 10%.

Overall revenue annual growth rate will exceed 35%.

Operating profit margin will exceed 35%.

Earnings per share (EPS) will exceed $20, higher than market analyst expectations.

The total data center market size will reach $1 trillion by 2030.

Partnerships

OpenAI: Announced a five-year, 6-gigawatt-scale strategic partnership. AMD has optimized the MI450 series for many of OpenAI’s use cases, including inference.

Oracle: Expanded their partnership. Oracle will be one of the first companies to offer public instances of the MI450, expected to scale starting in Q3 2026.

Meta: The partnership has expanded to include the MI300 and MI350, with deep collaboration on open standards for the Helios rack-scale solution.

U.S. Department of Energy (DOE): Will use MI400-series products, including the new MI430, in new supercomputers.

GB300 NVL72 MLCommons MLPerf Test Report

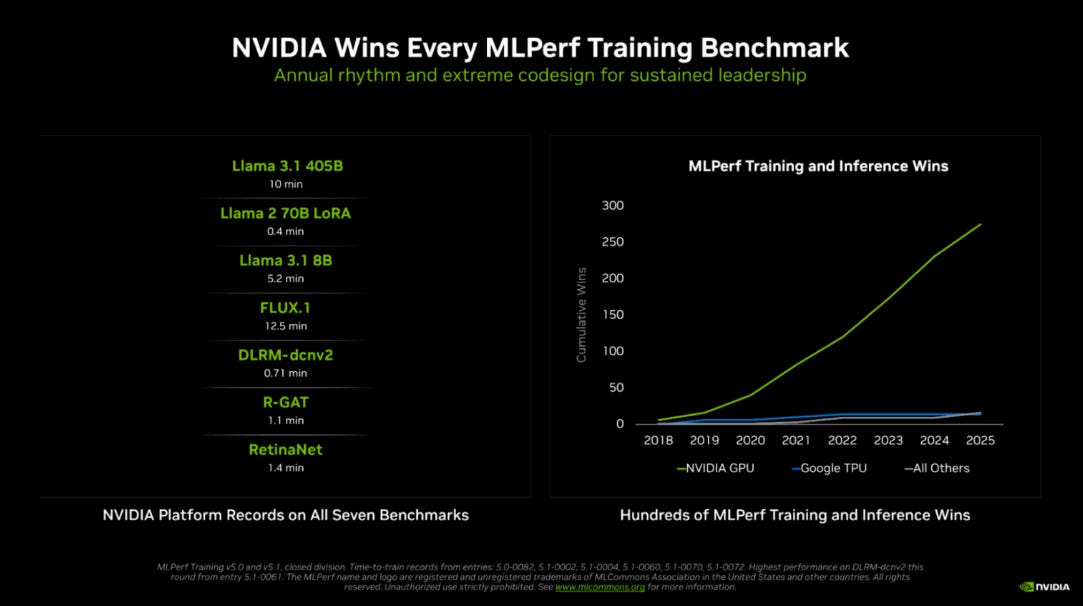

Unsurprisingly, and as widely expected, the GB300 NVL72 won across all model benchmarks. We also noted a few other points:

Lower data precision speeds up model computation and reduces memory requirements. Roughly speaking, FP8 is twice as fast as BF16, and FP4 is twice as fast as FP8. The drawback is reduced accuracy. As a result, pre-training has so far generally gone no lower than FP8, and inference no lower than FP4.

First, an explanation of data precision:

Simply put, FP4 and FP8 refer to “Floating-Point” (FP) data formats. The numbers 4 and 8 mean the format uses only 4 or 8 “bits” to store a single number.

They are “low-precision” formats, primarily aimed at achieving extreme performance, reducing memory footprint, and lowering power consumption in AI computing.

Why use FP4 and FP8?

As AI models (especially Large Language Models, LLMs) grow larger, computing in FP32 or FP16 hits two main bottlenecks:

Memory Wall: The massive model parameters (weights) cannot all fit into the GPU’s memory (VRAM), leading to the need for expensive hardware or extremely slow data swapping.

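Compute Speed: Higher-precision arithmetic needs more chip area, energy, and memory bandwidth per operation, so 32-bit or 16-bit math ultimately hits physical limits on how many operations a chip can deliver.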
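A back-of-the-envelope sketch of the memory wall: weight storage alone scales linearly with bits per parameter. It uses Llama 3.1 405B, the model in the MLPerf pre-training result, as the example and counts weights only (no KV cache, activations, or optimizer state). The NVFP4 line assumes one 8-bit scale per 16 values and ignores the small per-tensor scale.

```python
PARAMS = 405e9  # Llama 3.1 405B parameter count

BITS_PER_WEIGHT = {
    "FP32": 32.0,
    "BF16": 16.0,
    "FP8": 8.0,
    "FP4": 4.0,
    # Assumption: one 8-bit scale shared by every 16 values adds 0.5 bit per value.
    "NVFP4 (incl. block scales)": 4.0 + 8.0 / 16,
}


def weight_memory_gb(params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_weight / 8 / 1e9


for fmt, bits in BITS_PER_WEIGHT.items():
    print(f"{fmt:<28}{weight_memory_gb(PARAMS, bits):8.1f} GB")
```

Going from BF16 to FP4 cuts the weights from about 810 GB to about 203 GB (about 228 GB with block scales).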

NVFP4, NVIDIA’s improved 4-bit quantization format, performs better than standard FP4 and the MXFP4 variant. Having previously been used for inference, it has now been validated for pre-training as well.
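To illustrate why the block design matters, this sketch fake-quantizes random weights to FP4 (E2M1, whose representable magnitudes are 0, 0.5, 1, 1.5, 2, 3, 4 and 6) in two simplified ways. The “MXFP4-like” path shares a power-of-two scale across 32 values; the “NVFP4-like” path uses a finer 16-value block with a non-power-of-two scale, loosely following NVIDIA’s public description of NVFP4 (an FP8 E4M3 scale per 16 values). Simplifications, all assumptions: scales are kept in full precision, the MX scale is simply rounded up rather than following the exact OCP MX rule, the NVFP4 per-tensor scale is omitted, and ties round toward the smaller magnitude.

```python
import math
import random

# Non-negative magnitudes representable in FP4 E2M1 (1 sign, 2 exponent, 1 mantissa bit).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
E2M1_MAX = 6.0


def round_to_e2m1(x: float) -> float:
    """Round a scaled value to the nearest E2M1 value, saturating at +/-6.
    Ties go to the smaller magnitude (a simplification)."""
    mag = min(abs(x), E2M1_MAX)
    nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
    return math.copysign(nearest, x)


def fake_quantize(values: list[float], block_size: int, pow2_scale: bool) -> list[float]:
    """Quantize block by block to FP4 and return the dequantized values."""
    out: list[float] = []
    for start in range(0, len(values), block_size):
        block = values[start:start + block_size]
        amax = max(abs(v) for v in block)
        if amax == 0.0:
            out.extend(0.0 for _ in block)
            continue
        scale = amax / E2M1_MAX  # maps the block's largest magnitude onto 6
        if pow2_scale:
            # MXFP4-style shared scale must be a power of two; rounding up avoids clipping.
            scale = 2.0 ** math.ceil(math.log2(scale))
        out.extend(round_to_e2m1(v / scale) * scale for v in block)
    return out


def mse(a: list[float], b: list[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


random.seed(0)
weights = [random.gauss(0.0, 1.0) for _ in range(4096)]

mx_like = fake_quantize(weights, block_size=32, pow2_scale=True)
nv_like = fake_quantize(weights, block_size=16, pow2_scale=False)
print(f"MXFP4-like MSE: {mse(weights, mx_like):.5f}")
print(f"NVFP4-like MSE: {mse(weights, nv_like):.5f}")
```

On Gaussian-like weights the NVFP4-like path typically shows lower error. This illustrates the design trade-off; it does not reproduce NVIDIA’s results.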

NVIDIA used NVFP4 for pre-training for the first time, and MLPerf validated the result (the company had previously announced the approach on its official blog). Accuracy drops significantly only after 0.8T tokens. This is likely the first large-scale demonstration of FP4 pre-training, which is a breakthrough. Using about 5,000 GB200s, the Llama 3.1 405B pre-training benchmark took only 10 minutes.

The above refers to pre-training, not inference. For inference, there is no doubt FP4 will become mainstream, since its accuracy is on par with FP8.

We agree that Google’s massive TPU pods (over 9,000 TPUs, though details of the internal interconnect are unclear) are a significant advantage. However, I am personally skeptical of the argument that TPUs offer a better TCO (Total Cost of Ownership) for AI inference. FP4 inference is advancing quickly and should be widely adopted this year and next, yet TPU hardware still supports precision only down to FP8, with no native FP4 support. That means TPUs must compete with NVIDIA Blackwell, which can cut inference costs roughly in half by using FP4.
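The cost argument is simple arithmetic: cost per token is hourly system cost divided by token throughput. A minimal sketch with entirely hypothetical numbers (not measured prices or benchmark results), assuming FP4 doubles throughput on the same hardware:

```python
def cost_per_million_tokens(hourly_cost_usd: float, tokens_per_second: float) -> float:
    """Serving cost in USD per one million generated tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return hourly_cost_usd / tokens_per_hour * 1_000_000


# Hypothetical system: same hourly cost, FP8 vs FP4 throughput (FP4 assumed 2x).
HOURLY_COST = 100.0
FP8_TPS = 20_000.0
FP4_TPS = 2 * FP8_TPS

fp8_cost = cost_per_million_tokens(HOURLY_COST, FP8_TPS)
fp4_cost = cost_per_million_tokens(HOURLY_COST, FP4_TPS)
print(f"FP8: ${fp8_cost:.3f} per 1M tokens, FP4: ${fp4_cost:.3f} per 1M tokens")

# Hourly cost at which an FP8-only system with the same FP8 throughput matches FP4 cost per token.
breakeven_hourly = fp4_cost * FP8_TPS * 3600 / 1_000_000
print(f"Break-even hourly cost for an FP8-only system: ${breakeven_hourly:.2f}")
```

Under these assumptions, an FP8-only accelerator would need to cost about half as much per hour just to match FP4 on cost per token.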

The Blackwell Ultra (B300) isn’t just about the NVFP4 innovation. In terms of hardware, compared to the B200, its circuit design sacrifices FP64 to boost FP4 capabilities by 50%. Therefore, it has:

(1) NVFP4 data precision + (2) 50% more FP4 hardware throughput than Blackwell (B200).

For now, we remain skeptical that Google’s TPUs can achieve a cost advantage over the GB300 NVL72 in the AI inference market.

What I Read

Transcript

CoreWeave Earnings Call Transcript

Nebius Earnings Call Transcript

AMAT Earnings Call Transcript

Books