Weekly Shots of Insight and Market #10

AEHR:Wafer-level Burn-in will rise ; NVIDIA Enters Storage Servers

Life

Last week, my wife and I took a trip to Miami. This is arguably the most comfortable season to visit—perfect for escaping the winter chill and soaking up the sun on South Beach.

You can really feel that the city is booming, particularly in terms of finance and infrastructure. It has effectively become the “Wall Street of the South,” a movement largely spearheaded by Ken Griffin moving Citadel’s headquarters here. Other major players like Thoma Bravo, Point72 Asset Management, and Elliott Management have all established a presence following suit.

The Latin American influence is massive here; it feels like the unofficial capital of Latin America. You can honestly get by without speaking English, as Spanish seems to be the primary language on the streets.

However, my top recommendation has to be Sanguich. They cure their own ham and make a special Mojo sauce. Every bite is an explosion of savory juices, yet it’s never cloyingly greasy. I highly recommend it!

Market

AEHR:

Following AEHR's financial results and earnings call this week, we view this as a company that warrants close attention.

The Case for AEHR: The primary reason to watch AEHR is the surging market demand for Burn-in equipment. This demand is driven by three core factors: chips have become too expensive, too complex, and failure is absolutely not an option (mission-critical). In the past, burn-in might have been a sampling process for certain products. However, for today’s high-end applications, it has evolved into a mandatory “100% inspection” step.

AEHR is a Leader in Burn-in: There are surprisingly few participants in this space because the required know-how is incredibly specialized. While traditional Japanese manufacturers (such as Espec and Shinwa) provide environmental stress test equipment, they tend to focus on general ICs or automotive parts. They often cannot match AEHR’s capabilities in large-scale Wafer-Level Burn-in.

Concentration Profile: AEHR is a “pure-play” company focused almost exclusively on the niche burn-in market. It does not have diversified business lines to spread risk.

Two Main Product Lines:

Wafer-Level Burn-In (WLBI): Centered on the FOX Series (FOX-XP / FOX-NP / FOX-CP), combined with their proprietary WaferPak (full-wafer contactors) and the WaferPak Aligner for automation. These are used for burn-in, stabilization, and testing at the wafer or singulated die/module stage.

Packaged-Part Burn-In (PPBI): Expanded through the acquisition of Incal, this line includes Sonoma (ultra-high power), Tahoe (medium power), and Echo (low power, high parallelism) systems. These target post-packaging reliability demands in AI/HPC, data centers, and mixed-signal markets.

Here is the translation, formatted for a professional investment report or newsletter.

Financials & Earnings Call Highlights

Financial Data:

Sluggish Revenue and Earnings: Q2 revenue was $9.9 million, a 27% decrease year-over-year. The primary cause was a decline in WaferPak (consumables) shipments. Gross margin also dropped from 45.3% in the same period last year to 29.8%, driven by an unfavorable product mix (a lower percentage of high-margin consumables).

Bookings are the Core Highlight: Despite soft revenue this quarter, the company secured $6.5 million in new orders within the first six weeks of Q3, bringing the effective backlog to $18.3 million. The CEO emphasized that bookings for the second half of Fiscal Year 2026 are expected to reach $60 million to $80 million. The majority of this is for AI processor burn-in at both the wafer and package levels. This figure far exceeds revenue projections and serves as a key leading indicator for future growth.

“As a result, we’re reinstating guidance for the second half of fiscal ‘26. For the second half of fiscal ‘26, which began November 29, ‘25, and ends this May 29 of ‘26, Aehr expects revenue between $25 million and $30 million dollars. As stated earlier, although we’re not providing formal bookings guidance based on customer forecasts recently provided to Aehr, we believe our bookings in the second half of this fiscal year will be much higher than revenue, between $60 million and $80 million dollars in bookings, which would set the stage for a very strong fiscal ‘27 that begins on May 30, 2026.” -Gayn Erickson (Chief Executive Officer, Director)

New Customer Activity: Since the last earnings call, two additional AI processor companies have initiated plans for wafer-level benchmark evaluations.

Flash Memory Milestone: Just before the Christmas holiday, the company completed a wafer-level benchmark test with a leading global NAND flash memory manufacturer, preparing for future HBF (High Bandwidth Flash) production.

Conference Call Key Takeaways

1. AI Momentum & Market Potential Institutional investors are highly focused on the composition and validity of the $60M–$80M booking guidance for H2 FY2026.

Order Composition: Management confirmed that the vast majority of these substantial orders come from AI processors (covering both wafer-level and package-level testing), while the share of Silicon Carbide (SiC) is extremely low.

Single Client Potential: When asked if the AI market is truly larger than SiC, the company replied that a single large AI client’s system requirements could reach 20–30 units (at $4M–$5M per unit). The AI business scale could reach hundreds of millions of dollars in the coming years.

2027 Outlook: These orders are expected to come in at the end of FY2026 and convert into revenue starting in Q1 FY2027 (June 2026), underpinning expectations for strong growth.

2. Technology Path: Wafer-level vs. Package-level Competition & Synergy

Benchmark Delays: The company addressed why AI wafer-level testing progress has been slower than expected. Clients, accustomed to package-level testing, need to adjust their DFT (Design for Test) rules and test vectors when shifting to wafer-level. This “learning curve” caused delays of several weeks to months.

Coexistence: Management emphasized that package-level systems (Sonoma) and wafer-level systems (Fox) will coexist long-term. Package-level is suitable for initial qualification (HTOL), while wafer-level testing offers massive yield savings in the production phase by preventing the scrapping of expensive HBM (High Bandwidth Memory) and substrates.

Sonoma’s Strategic Position: The Sonoma system is currently the “preferred standard” for AI clients. Its 2,000-Watt ultra-high power testing capability is unique in the market and allows for a seamless transition to automated production.

3. Specific Market Progress: Flash & Photonics

High Bandwidth Flash (HBF): Regarding NAND Flash benchmarks, clients are shifting from traditional enterprise SSDs to HBF optimized for AI workloads. This requires higher power consumption, playing directly into Aehr’s technical strengths.

Silicon Photonics: Although volume production schedules for leading clients have been pushed to FY2027, this aligns with the launch cadence of new AI platforms.

4. Capacity & Operational Efficiency Given the revenue dip, attention turned to the company’s ability to handle future explosive order growth.

Doubling Capacity: Through facility renovations, capacity has increased 5x, with the current ability to deliver over 20 systems per month.

Consumables & Margins: The drop in gross margin was explained by a temporary decrease in the shipment ratio of high-margin WaferPaks. As the installed base of systems rises, consumable revenue is expected to rebound.

5. Competitive Landscape Currently, AEHR is the only player in wafer-level burn-in testing. Most market data is based on package-level testing. As clients begin migrating toward wafer-level burn-in, AEHR occupies a critical position.

“So as we get our arms around the market, the market data that would be out there would be packaged-part because no one’s doing wafer level except for us, and so we’re creating our own models related to, okay, for that unit capacity, if you went to wafer-level burn-in, what would that look like? Kind of similar to what we had to go through in the original silicon carbide side of things of the whole market, and we’re not sitting here, everybody included Nvidia and Google and Microsoft and Tesla and these guys all went with us.”-Gayn Erickson (Chief Executive Officer, Director)

Insightology View

We believe the wafer-level Burn-in market represents a new wave of demand in semiconductor testing. As power consumption continues to rise, AEHR is undeniably situated in a critical position to capitalize on this shift. Furthermore, we believe the acceleration in demand for the broader testing industry will sustain.

Watch List:

AEHR (USA)

TER (Teradyne - USA)

Chroma (TW)

Hon. Precision (TW)

Advantest (JP)

ASE (TW)

NVIDIA Enters Storage Servers: The Inference Context Memory Storage Platform (ICMS)

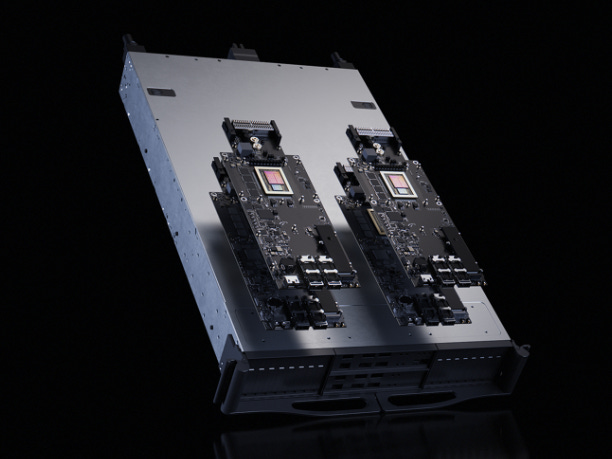

NVIDIA CEO Jensen Huang unveiled the “NVIDIA Inference Context Memory Storage Platform (ICMS)” during his keynote, marking NVIDIA’s official entry into the storage server domain.

The core purpose of ICMS is to alleviate the burden of the “KV Cache” (Key-Value Cache) generated by Large Language Models (LLMs) during AI inference.

The Problem: KV Cache acts as the AI model’s “short-term memory” and excessively consumes precious GPU High Bandwidth Memory (HBM) capacity.

The Solution: The new architecture offloads this data to storage servers and retrieves it only when needed, thereby freeing up GPU HBM space. This is expected to resolve the recent “degradation” issues (where ChatGPT seems to get “dumber”) caused by user surges at OpenAI.

The Shift to SSDs: In the AI training era, market attention was solely on HBM crowding out DRAM. With NVIDIA’s entry into storage servers, it declares that in the AI inference era, SSDs will play a far more critical role than before. Previously, inference relied on DRAM/HBM. The future model will be “Inference using DRAM/HBM + NVMe SSD simultaneously,” and behind those SSDs lies NAND technology.

Rack Architecture & The Changing Role of the DPU

Rack Configuration: The top two trays of each NVIDIA ICMS rack will house 8 Bluefield-4 DPU boards, while all trays below will be populated with SSDs.

DPU: From Supporting Actor to Lead: Unlike the previous NVIDIA GB300 NVL 72 racks where the Bluefield-3 DPU was merely optional, in the ICMS rack, the Bluefield-4 DPU plays the critical role of managing all SSDs. This signifies a shift where the DPU moves from a peripheral component to a central, leading role.

Note: The Bluefield-4 DPU board integrates the Grace CPU and DPU into a single package.

Supply Chain Details:

LPDDR5X: Supplied by Micron.

SSD Controller: Supplied by SanDisk.

BMC: Each DPU board is equipped with an ASPEED AST2600 BMC.

Insightology View

1. NAND/SSD is the True Structural Beneficiary This is the most significant impact. We are seeing several structural changes:

KV Cache is moving from “cold data” to “quasi-hot data.”

Latency is no longer just a storage issue; it is now an inference KPI.

Capacity is no longer just a cost center; it is a throughput multiplier.

2. Changing Metrics What is the result? SSD metrics are shifting from “TB per Dollar” to “KV per Rack / Latency Bound.” Demand will pivot from “capacity-oriented” to “Performance × Latency × Network Affinity” oriented. This creates a highly favorable environment for high-capacity, enterprise-grade, and high-endurance SSDs.

3. Industrial Tech Meets AI We believe industrial-grade SSD technology is well-suited for the high-value AI Server/Data Center market. However, many of these are not “new architectures” or “new technologies” born purely for AI; they are existing technologies in the industrial sector (such as pSLC—simulating SLC using MLC or TLC NAND) that have been mature and widely used for years.

4. NVIDIA’s Strategic Triad From a hardware perspective, this is a storage architecture adjustment. But from NVIDIA’s perspective, three things are happening simultaneously:

Inference efficiency improvements increase GPU ROI.

BlueField-4 becomes a mandatory component for AI factories, driving DPU volume.

Storage architecture becomes locked in by NVIDIA’s software stack (via NVIDIA DOCA™, NVIDIA NIXL, NVIDIA Dynamo).

Essentially, this will compel more non-hyperscaler customers to rely on NVIDIA’s turnkey solutions rather than cobbling together their own storage, network, and software stacks.